The new BLAKE3 hazmat API

by Rüdiger Klaehn

In iroh-blobs, we use the BLAKE3 hash function for content addressing. To allow verified streaming, we need access to some internals of the hash function. For the last 18 months we have maintained a fork of the BLAKE3 crate for exactly this purpose. With the latest release of BLAKE3, there is a new hazmat API that allows us to retire this fork.

This blog post goes into a lot of detail on the previous guts API, the new hazmat API, and how we use these APIs in blobs. It will be of interest to other advanced users of the BLAKE3 hash function.

Short summary of BLAKE3

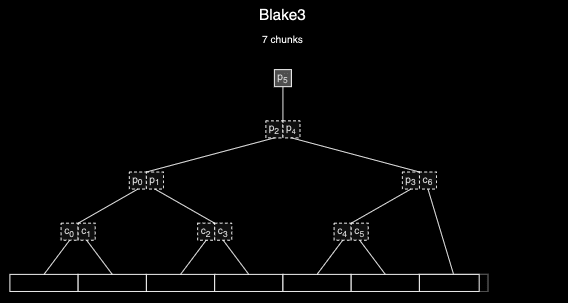

The BLAKE3 hash function is a tree hash function. Briefly, the data is divided into 1024-byte chunks, and each chunk is then hashed individually.

To compute a root hash, pairs of hashes are combined with a binary combine function until just a single hash remains. To provide domain separation, only the final root hash gets computed with a special flag is_root set to true.
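The structure described above can be sketched in a few lines. This is a toy model and not BLAKE3: `toy_hash` is a 64-bit FNV-1a stand-in for the real compression function, the real chunk hash takes additional inputs that are left out here, and all function names are made up for illustration. The split rule in `left_len` (the left subtree takes the largest power-of-two number of chunks that still leaves at least one byte for the right subtree) follows the BLAKE3 specification.

```rust
const CHUNK_LEN: usize = 1024;

/// Toy stand-in for the real hash: 64-bit FNV-1a over a tag byte and the data.
/// NOT BLAKE3 and NOT cryptographically secure.
fn toy_hash(tag: u8, data: &[u8]) -> u64 {
    let mut h: u64 = 0xcbf29ce484222325;
    for &b in std::iter::once(&tag).chain(data) {
        h = (h ^ b as u64).wrapping_mul(0x100000001b3);
    }
    h
}

/// Hash a single chunk. Bit 0 of the tag plays the role of the is_root flag.
fn chunk_hash(chunk: &[u8], is_root: bool) -> u64 {
    toy_hash(is_root as u8, chunk)
}

/// Combine two child hashes. Bit 1 of the tag marks a parent node.
fn parent_hash(left: u64, right: u64, is_root: bool) -> u64 {
    let mut buf = [0u8; 16];
    buf[..8].copy_from_slice(&left.to_le_bytes());
    buf[8..].copy_from_slice(&right.to_le_bytes());
    toy_hash(2 | is_root as u8, &buf)
}

/// Number of bytes that go into the left subtree for an input of `len` bytes.
/// Only meaningful for inputs longer than one chunk.
fn left_len(len: usize) -> usize {
    debug_assert!(len > CHUNK_LEN);
    let full_chunks = (len - 1) / CHUNK_LEN;
    // Largest power of two that is <= full_chunks.
    let pow2 = 1usize << (usize::BITS - 1 - full_chunks.leading_zeros());
    pow2 * CHUNK_LEN
}

/// Hash `data` as a tree. Only the outermost call should pass is_root = true;
/// every inner node and every chunk below it is hashed with is_root = false.
fn tree_hash(data: &[u8], is_root: bool) -> u64 {
    if data.len() <= CHUNK_LEN {
        return chunk_hash(data, is_root);
    }
    let (left, right) = data.split_at(left_len(data.len()));
    parent_hash(tree_hash(left, false), tree_hash(right, false), is_root)
}
```

Calling `tree_hash(&data, true)` yields the toy root hash. Passing `false` instead gives the value the same data would contribute as an inner node of a larger tree, and the flag ensures the two never coincide.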

Why does iroh-blobs need internals access?

The iroh-blobs protocol allows for verified streaming of data, just like the bao crate. In order to do so we need to be able to compute intermediate results of the computation described above.

Most users are only interested in the root hash, and won't ever have to deal with intermediate results.

Minimal internals API

At a minimum, we need the ability to hash individual chunks, as well as the ability to combine chunks to build the binary tree up to the root.

Previously, the BLAKE3 crate provided exactly this functionality in an undocumented and unstable guts API that was also used by the bao crate.

A function parent_cv provided the ability to combine two non-root hashes (also called chaining values) into another chaining value or a root hash.

pub fn parent_cv(left_child: &Hash, right_child: &Hash, is_root: bool) -> Hash

And the ChunkState struct provided a builder-like API to compute a (root or non-root) hash for any chunk.

Why the fork?

One reason for the very good performance of the BLAKE3 hash function is the ability to compute chunk hashes in parallel. BLAKE3 uses SIMD instructions to the greatest extent possible, and on top of that can optionally use thread-level parallelism via the rayon crate.

When computing the hash of a large blob, the Hasher can perform chunk hash computations in any order, and it exploits this freedom to hash several chunks at once using SIMD.

But if you only ever ask the poor thing for hashes of individual chunks, there is nothing it can do!

So to get the benefit of this awesomeness, we need to give the hash function multiple chunks to work with, even when computing subtree hashes.

iroh-blobs works with chunk groups of 16 chunks. When sending or receiving data, the most expensive hashing related computation going on in iroh-blobs is computing the hash of a subtree consisting of 16 chunks.
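To make the sizes concrete, here is a small sketch of the chunk group arithmetic. The constants come from the text (1024-byte chunks, 16 chunks per group); the helper names are hypothetical and not part of iroh-blobs or the BLAKE3 crate. Treating an empty blob as one empty group is an assumption, mirroring how BLAKE3 hashes empty input as a single empty chunk.

```rust
use std::ops::Range;

const CHUNK_LEN: u64 = 1024;
const CHUNKS_PER_GROUP: u64 = 16;
/// Size of one chunk group in bytes: 16 * 1024 = 16384 (16 KiB).
const GROUP_LEN: u64 = CHUNK_LEN * CHUNKS_PER_GROUP;

/// Number of chunk groups needed to cover a blob of `size` bytes.
/// Assumption: an empty blob still counts as one (empty) group.
fn group_count(size: u64) -> u64 {
    ((size + GROUP_LEN - 1) / GROUP_LEN).max(1)
}

/// Index of the first chunk in chunk group `group`.
fn start_chunk(group: u64) -> u64 {
    group * CHUNKS_PER_GROUP
}

/// Byte range covered by chunk group `group` in a blob of `size` bytes.
/// The last group may be shorter than GROUP_LEN.
fn group_range(group: u64, size: u64) -> Range<u64> {
    let start = (group * GROUP_LEN).min(size);
    let end = (start + GROUP_LEN).min(size);
    start..end
}
```

For a 20000-byte blob this gives two groups: the first covers bytes 0..16384 and starts at chunk 0, the second covers 16384..20000 and starts at chunk 16.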

You can of course compute this sequentially using the primitives exposed by the guts API. But you only benefit from the parallelism of BLAKE3 if you give all chunks to the hasher at once. This is exactly what our fork does: it adds a function to the guts API that hashes an entire subtree:

pub fn hash_subtree(start_chunk: u64, data: &[u8], is_root: bool) -> Hash
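The `start_chunk` parameter matters because BLAKE3 feeds a chunk counter into every chunk hash, so a subtree can only be hashed on its own if the hasher knows where in the blob it starts. The sketch below illustrates this with a toy model. It is not BLAKE3 and not the code from our fork: `toy_hash` is a 64-bit FNV-1a stand-in, and `toy_hash_subtree` only mirrors the shape of the signature above.

```rust
const CHUNK_LEN: usize = 1024;

/// Toy stand-in for the real hash: FNV-1a over a tag byte, a counter and the data.
/// NOT BLAKE3 and NOT cryptographically secure.
fn toy_hash(tag: u8, counter: u64, data: &[u8]) -> u64 {
    let counter_bytes = counter.to_le_bytes();
    let mut h: u64 = 0xcbf29ce484222325;
    for &b in std::iter::once(&tag).chain(&counter_bytes).chain(data) {
        h = (h ^ b as u64).wrapping_mul(0x100000001b3);
    }
    h
}

/// Combine two child hashes into a parent (root or non-root).
fn parent(left: u64, right: u64, is_root: bool) -> u64 {
    let mut buf = [0u8; 16];
    buf[..8].copy_from_slice(&left.to_le_bytes());
    buf[8..].copy_from_slice(&right.to_le_bytes());
    toy_hash(2 | is_root as u8, 0, &buf)
}

/// Bytes in the left subtree: the largest power-of-two number of chunks
/// that still leaves at least one byte for the right subtree.
fn left_len(len: usize) -> usize {
    debug_assert!(len > CHUNK_LEN);
    let full_chunks = (len - 1) / CHUNK_LEN;
    (1usize << (usize::BITS - 1 - full_chunks.leading_zeros())) * CHUNK_LEN
}

/// Toy analogue of hash_subtree: hash `data`, which starts at chunk
/// index `start_chunk` of the full blob, as a root or non-root subtree.
fn toy_hash_subtree(start_chunk: u64, data: &[u8], is_root: bool) -> u64 {
    if data.len() <= CHUNK_LEN {
        // Leaf: the chunk index is part of the hash input.
        return toy_hash(is_root as u8, start_chunk, data);
    }
    let mid = left_len(data.len());
    let (left, right) = data.split_at(mid);
    let right_start = start_chunk + (mid / CHUNK_LEN) as u64;
    parent(
        toy_hash_subtree(start_chunk, left, false),
        toy_hash_subtree(right_start, right, false),
        is_root,
    )
}
```

For a blob of exactly two chunk groups, hashing the first group with `start_chunk` 0 and the second with `start_chunk` 16, both as non-root, and combining the results with `parent(.., .., true)` gives the same value as `toy_hash_subtree(0, &blob, true)`. Hashing the second group with the wrong start chunk changes every leaf hash, which is the kind of mistake that silently produces wrong results.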

But using and maintaining this fork caused quite a bit of complexity. There were build issues due to symbol collisions when people had both the original BLAKE3 crate and our fork in their dependencies. And we did not keep up with the improvements in the upstream crate.

The new hazmat API

The biggest change in the new hazmat API compared to the guts API is that it is a public API with the same stability guarantees as anything else in a 1.x Rust crate.

There is a convention in the RustCrypto collection of cryptography crates to name such useful but potentially dangerous APIs hazmat APIs, hence the name.

To hash subtrees, the hazmat API has an extension trait HasherExt that allows setting the input offset on the standard Hasher. In addition, it adds the ability to finalize the computation to a non-root hash or chaining value.

The ability to combine two non-root hashes or chaining values is provided by two functions, merge_subtrees_root and merge_subtrees_non_root.

A change from the old guts API is that chaining values are now a separate type alias to distinguish them from root hashes.

There are also a number of functions to use a BLAKE3 hash as an extendable output function, which we don't use in iroh-blobs.

Despite this attempt to make the distinction between chaining values and root hashes clearer, it is still very easy to use the hazmat API in a way that does not result in correct hashes. Hence the large disclaimers in the docs. So be careful!

Other forks

Due to its useful tree structure, high performance and built-in parallelism, the BLAKE3 hash function is quite popular. Several other projects like fleek or fluence also forked the BLAKE3 crate, often for very similar reasons.

These are just the public forks on crates.io; there are probably more private or less visible forks. For example, the s5 project also uses BLAKE3 with chunk groups, but with a larger chunk group size than iroh-blobs.

I hope that some of these other projects will take a look at the new hazmat API and will possibly be able to retire their forks as well!

Thanks to Jack O'Connor for BLAKE3 and for providing the new hazmat API!

To get started, take a look at our docs, dive directly into the code, or chat with us on our Discord channel.